Cinnamon/kotaemon: An open-source RAG-based tool for chatting with your documents.

url: https://github.com/Cinnamon/kotaemon

date: "2024-08-27 22:24:42"

kotaemon

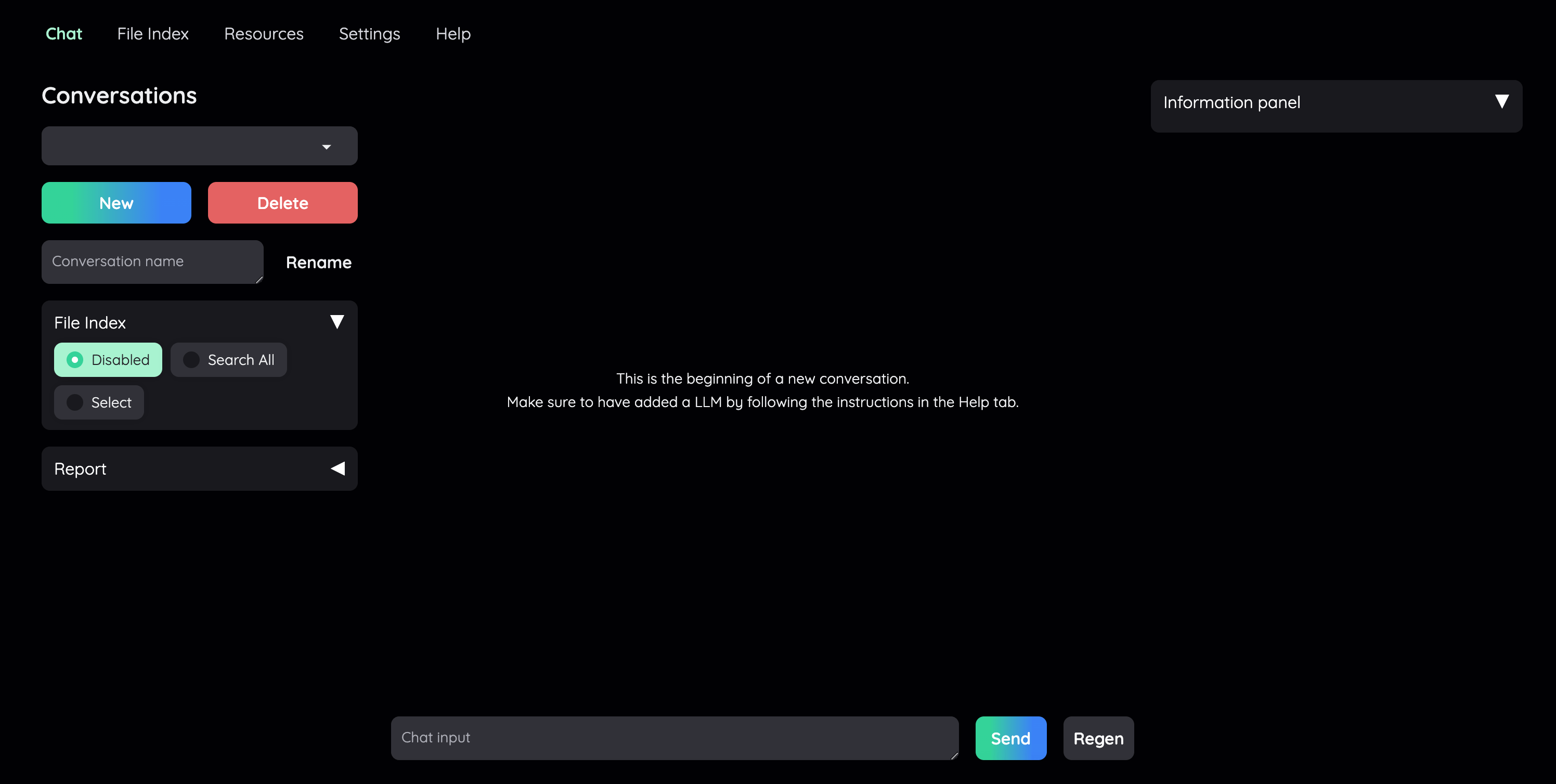

An open-source clean & customizable RAG UI for chatting with your documents. Built with both end users and developers in mind.

Live Demo | Source Code

User Guide | Developer Guide | Feedback

Introduction

This project serves as a functional RAG UI for both end users who want to do QA on their documents and developers who want to build their own RAG pipeline.

- For end users:
  - A clean & minimalistic UI for RAG-based QA.
  - Supports LLM API providers (OpenAI, AzureOpenAI, Cohere, etc.) and local LLMs (via `ollama` and `llama-cpp-python`).
  - Easy installation scripts.

- For developers:
  - A framework for building your own RAG-based document QA pipeline.
  - Customize and see your RAG pipeline in action with the provided UI (built with Gradio).

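+----------------------------------------------------------------------------+
| End users: Those who use apps built with `kotaemon`.                       |
| (You use an app like the one in the demo above)                            |
|     +----------------------------------------------------------------+     |
|     | Developers: Those who build with `kotaemon`.                   |     |
|     | (You have `import kotaemon` somewhere in your project)         |     |
|     |     +----------------------------------------------------+     |     |
|     |     | Contributors: Those who make `kotaemon` better.    |     |     |
|     |     | (You make PRs to this repo)                        |     |     |
|     |     +----------------------------------------------------+     |     |
|     +----------------------------------------------------------------+     |
+----------------------------------------------------------------------------+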

This repository is under active development. Feedback, issues, and PRs are highly appreciated.

Key Features

- Host your own document QA (RAG) web UI. Supports multi-user login, organizing your files in private / public collections, and collaborating on and sharing your favorite chats with others.

- Organize your LLM & embedding models. Supports both local LLMs and popular API providers (OpenAI, Azure, Ollama, Groq).

- Hybrid RAG pipeline. A sane default RAG pipeline with a hybrid (full-text & vector) retriever plus re-ranking to improve retrieval quality.

- Multi-modal QA support. Perform question answering on multiple documents, including their figures and tables. Supports multi-modal document parsing (selectable options on the UI).

- Advanced citations with document preview. By default, the system provides detailed citations so you can verify the correctness of LLM answers. View your citations (incl. relevance scores) directly in the in-browser PDF viewer with highlights. The system warns you when the retrieval pipeline returns articles of low relevance.

- Support for complex reasoning methods. Use question decomposition to answer complex / multi-hop questions. Supports agent-based reasoning with ReAct, ReWOO and other agents.

- Configurable settings UI. You can adjust the most important aspects of the retrieval & generation process on the UI (incl. prompts).

- Extensible. Because it is built on Gradio, you are free to customize or add any UI elements you like. We also aim to support multiple strategies for document indexing & retrieval; a `GraphRAG` indexing pipeline is provided as an example.
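The README does not say how kotaemon merges full-text and vector hits before re-ranking. As an illustration only, a common way to combine two ranked lists is reciprocal rank fusion (RRF), sketched below. `reciprocal_rank_fusion` and the document IDs are hypothetical, not kotaemon's API, and a re-ranker would then reorder the fused list.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists of document IDs into a single ranking.

    A document's fused score is the sum of 1 / (k + rank) over every list
    it appears in, with ranks starting at 1. k = 60 is the value used in
    the original RRF paper; a larger k flattens the gap between top ranks.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID so the output is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical hit lists from a full-text retriever and a vector retriever.
full_text_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([full_text_hits, vector_hits])
print([doc_id for doc_id, _ in fused])  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

RRF only looks at ranks, so it needs no score normalization between the full-text and vector retrievers, which is why it is a popular default for hybrid search.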

Installation

For end users

This document is intended for developers. If you just want to install and use the app as it is, please follow the non-technical User Guide (WIP).

For developers

With Docker (recommended)

- Use this command to launch the server:

docker run \
  -e GRADIO_SERVER_NAME=0.0.0.0 \
  -e GRADIO_SERVER_PORT=7860 \
  -p 7860:7860 -it --rm \
  taprosoft/kotaemon:v1.0

Navigate to http://localhost:7860/ to access the web UI.

Without Docker

- Clone the repo and install the required packages in a fresh Python environment.

# optional (set up env)
conda create -n kotaemon python=3.10
conda activate kotaemon

# clone this repo
git clone https://github.com/Cinnamon/kotaemon
cd kotaemon

pip install -e "libs/kotaemon[all]"
pip install -e "libs/ktem"

- View and edit your environment variables (API keys, endpoints) in `.env`.

- (Optional) To enable the in-browser PDF_JS viewer, download PDF_JS_DIST and extract it to `libs/ktem/ktem/assets/prebuilt`.

- Start the web server:

The app will be automatically launched in your browser.

The default username / password is `admin` / `admin`. You can set up additional users directly on the UI.

Customize your application

By default, all application data is stored in the `./ktem_app_data` folder. You can back up or copy this folder to move your installation to a new machine.
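A backup can be as simple as copying the folder. The sketch below is a hypothetical helper (not part of kotaemon) that copies `./ktem_app_data` into a timestamped directory; it assumes the app is stopped first so no files change during the copy.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_app_data(src: str = "./ktem_app_data", dest_root: str = "./backups") -> Path:
    """Copy the kotaemon app-data folder into a new timestamped directory.

    Hypothetical helper: stop the app before running it so no files are
    being written while they are copied.
    """
    src_path = Path(src)
    if not src_path.is_dir():
        raise FileNotFoundError(f"app data folder not found: {src_path}")
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    dest = Path(dest_root) / f"{src_path.name}-{stamp}"
    # copytree creates dest (and any missing parents) and raises FileExistsError
    # if dest already exists, so an earlier backup is never overwritten.
    shutil.copytree(src_path, dest)
    return dest
```

Calling `backup_app_data()` with its defaults copies `./ktem_app_data` into `./backups/ktem_app_data-<timestamp>`; copying that folder back to `./ktem_app_data` on the new machine restores the data.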

For advanced users or specific use cases, you can customize these files:

- `flowsettings.py`
- `.env`

flowsettings.py

This file contains the configuration of your application. You can use the example here as the starting point.

Notable settings

# Set up your preferred document store (with full-text search capabilities)
KH_DOCSTORE=(Elasticsearch | LanceDB | SimpleFileDocumentStore)

# Set up your preferred vector store (for vector-based search)
KH_VECTORSTORE=(ChromaDB | LanceDB)

# Enable / disable multimodal QA
KH_REASONINGS_USE_MULTIMODAL=True

# Set up your new reasoning pipeline or modify an existing one.
KH_REASONINGS = [
    "ktem.reasoning.simple.FullQAPipeline",
    "ktem.reasoning.simple.FullDecomposeQAPipeline",
    "ktem.reasoning.react.ReactAgentPipeline",
    "ktem.reasoning.rewoo.RewooAgentPipeline",
]
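Each `KH_REASONINGS` entry is a dotted path of the form `package.module.ClassName`. Assuming ktem imports these strings in the usual import-by-path way (the README does not describe the loading code), you can check an entry ahead of time with a hypothetical helper like the one below. It is demonstrated on a standard-library class so it runs without kotaemon installed.

```python
import importlib


def load_class(dotted_path: str) -> type:
    """Import 'package.module.ClassName' and return the class it names."""
    module_path, _, class_name = dotted_path.rpartition(".")
    if not module_path:
        raise ValueError(f"expected 'package.module.ClassName', got {dotted_path!r}")
    module = importlib.import_module(module_path)  # ModuleNotFoundError on a bad module path
    cls = getattr(module, class_name, None)
    if not isinstance(cls, type):
        raise ImportError(f"{class_name!r} is not a class in module {module_path!r}")
    return cls


# Runs anywhere: a standard-library class stands in for a pipeline class.
print(load_class("collections.OrderedDict"))  # <class 'collections.OrderedDict'>

# Inside a kotaemon install you could check a real entry the same way, e.g.
# load_class("ktem.reasoning.simple.FullQAPipeline")
```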

.env

This file provides another way to configure your models and credentials.

Configure models via the .env file

Alternatively, you can configure the models via the .env file with the information needed to connect to the LLMs. This file is located in the application folder. If you don’t see it, you can create one.

Currently, the following providers are supported:

OpenAI

In the .env file, set the OPENAI_API_KEY variable to your OpenAI API key to enable access to OpenAI’s models. Other variables can also be modified; feel free to edit them to fit your case. Otherwise, the default parameters should work for most people.

OPENAI_API_BASE=https://api.openai.com/v1
OPENAI_API_KEY=<your OpenAI API key here>
OPENAI_CHAT_MODEL=gpt-3.5-turbo
OPENAI_EMBEDDINGS_MODEL=text-embedding-ada-002
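Before starting the app, it can help to confirm that the key is actually set. The sketch below reads `.env` text with a deliberately simplified KEY=VALUE parser (an assumption: it ignores multi-line values, `export` prefixes and variable expansion that full .env loaders handle) and reports required variables that are missing or empty. `parse_env` and `missing_keys` are hypothetical helpers, not part of kotaemon.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and '#' comments.

    Deliberately minimal: no multi-line values, `export` prefixes or
    ${VAR} expansion, which full-featured .env loaders support.
    """
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")  # split on the first '=' only
        if sep:
            # Drop surrounding whitespace, then any quote characters at either end.
            values[key.strip()] = value.strip().strip("\"'")
    return values


def missing_keys(values: dict[str, str], required: tuple[str, ...] = ("OPENAI_API_KEY",)) -> list[str]:
    """Return required variables that are absent or set to an empty string."""
    return [key for key in required if not values.get(key)]


sample = """
OPENAI_API_BASE=https://api.openai.com/v1
OPENAI_API_KEY=
OPENAI_CHAT_MODEL=gpt-3.5-turbo
"""
print(missing_keys(parse_env(sample)))  # ['OPENAI_API_KEY']
```

To check your real file, pass the output of `Path(".env").read_text()` (from `pathlib`) to `parse_env`. Note that a placeholder such as `<your OpenAI API key here>` counts as "set", so only a missing or empty value is reported.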

Azure OpenAI

For OpenAI models via the Azure platform, you need to provide your Azure endpoint and API key. You might also need to provide your deployment names for the chat model and the embedding model, depending on how you set up your Azure deployment.

AZURE_OPENAI_ENDPOINT=
AZURE_OPENAI_API_KEY=
OPENAI_API_VERSION=2024-02-15-preview
AZURE_OPENAI_CHAT_DEPLOYMENT=gpt-35-turbo
AZURE_OPENAI_EMBEDDINGS_DEPLOYMENT=text-embedding-ada-002

Local models

Using the ollama OpenAI-compatible server

Install ollama and start the application.

Pull your models (e.g.):

ollama pull llama3.1:8b
ollama pull nomic-embed-text
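Before entering the model names in the web UI, you can check that ollama answers on its OpenAI-compatible API. This sketch uses only the Python standard library and assumes ollama's default address `http://localhost:11434` with the OpenAI-style `/v1/chat/completions` route; `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request


def build_chat_request(model: str, prompt: str,
                       base_url: str = "http://localhost:11434/v1") -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /chat/completions endpoint."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


req = build_chat_request("llama3.1:8b", "Say hello in one word.")
# print(send(req))  # uncomment once ollama is running and the model has been pulled
```

If `send` returns a reply, the same model name (`llama3.1:8b` here) is what you enter in the web UI.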

Set the model names on the web UI and make them the default.

Using GGUF with llama-cpp-python

You can search for and download an LLM to run locally from the Hugging Face Hub. Currently, the following model formats are supported:

- GGUF

You should choose a model that is smaller than your device’s available memory while leaving about 2 GB free. For example, if you have 16 GB of RAM in total, of which 12 GB is available, you should choose a model that takes up at most 10 GB of RAM. Bigger models tend to give better generations but also take more processing time.
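The sizing rule above is simple arithmetic: the budget is the available RAM minus about 2 GB, and total RAM does not enter into it. The helper names below are hypothetical, and the 2 GB default is the figure from the text.

```python
def max_model_size_gb(available_ram_gb: float, headroom_gb: float = 2.0) -> float:
    """Largest model size that still leaves `headroom_gb` of the available RAM free."""
    budget = available_ram_gb - headroom_gb
    if budget <= 0:
        raise ValueError(f"{available_ram_gb} GB available leaves no room after {headroom_gb} GB headroom")
    return budget


def fits_in_memory(model_size_gb: float, available_ram_gb: float, headroom_gb: float = 2.0) -> bool:
    """True if a model of `model_size_gb` fits within the budget above."""
    return model_size_gb <= max_model_size_gb(available_ram_gb, headroom_gb)


# The example from the text: 16 GB total, of which 12 GB is available.
print(max_model_size_gb(12))    # 10.0
print(fits_in_memory(8.5, 12))  # True
print(fits_in_memory(11, 12))   # False
```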

Here are some recommendations and their size in memory:

- Qwen1.5-1.8B-Chat-GGUF: around 2 GB

On the web UI, add a new LlamaCpp model with the provided model name.

Adding your own RAG pipeline

Custom reasoning pipeline

First, check the default pipeline implementation here. You can make quick adjustments to how the default QA pipeline works.

Next, if you feel comfortable adding a new pipeline, add a new `.py` implementation in `libs/ktem/ktem/reasoning/` and then include it in `flowsettings.py` to enable it on the UI.
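Enabling the new file is a one-line change to the `KH_REASONINGS` list in `flowsettings.py`. In the sketch below, `my_pipeline.py` and `MyQAPipeline` are hypothetical names, and what the class itself must implement is not shown; the existing entries suggest the dotted path mirrors the file path below `libs/ktem/`, followed by the class name.

```python
# flowsettings.py (excerpt): the four default entries plus one new pipeline.
# Hypothetical: assumes you created libs/ktem/ktem/reasoning/my_pipeline.py
# containing a class named MyQAPipeline.
KH_REASONINGS = [
    "ktem.reasoning.simple.FullQAPipeline",
    "ktem.reasoning.simple.FullDecomposeQAPipeline",
    "ktem.reasoning.react.ReactAgentPipeline",
    "ktem.reasoning.rewoo.RewooAgentPipeline",
    "ktem.reasoning.my_pipeline.MyQAPipeline",  # hypothetical new entry
]
```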

Custom indexing pipeline

Check the sample implementation in `libs/ktem/ktem/index/file/graph` (more instructions WIP).

Developer guide

Please refer to the Developer Guide for more details.
